These last two weeks, I finally found time to give Tableau 5.0 a try. Tableau enjoys a stellar reputation among the data visualization community. About a year ago, I saw a live demo of Tableau by CEO and salesman extraordinaire Christian Chabot. Like most of the audience, I was very impressed, not so much by the capabilities of the software as by the ease and speed with which insightful analysis seemed to appear out of bland data. But what does it feel like from the user’s perspective?

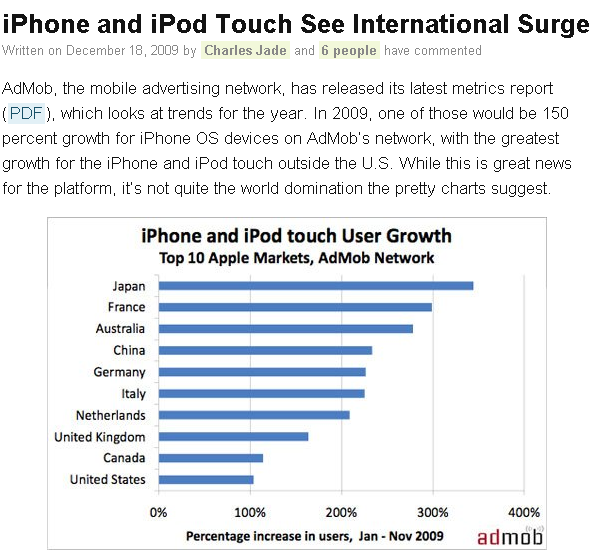

Chartz: ur doing it wrong

Pretty much everyone who has written about charts would agree that the very first step in making one is to decide what to show. The form of the display is a consequence of this choice.

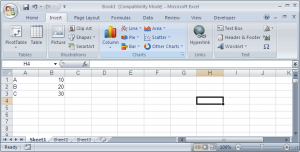

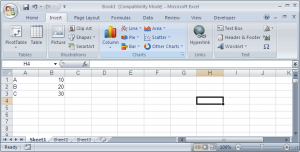

Most software gets this wrong. It will ask you what you want your display to look like, then ask you for your data. Take this screenshot from Excel:

When you want to insert a chart, you must first choose what kind of chart (bar, line, column, pie, area, scatter, other charts) and one of its sub-types. You are not asked what data the chart applies to, or what that data really is. You are not asked what you are trying to show through your chart – this is something you have to manage outside of the software. You just choose a chart.

I’m picking Excel because with 200m users, everyone will know what I’m talking about, but virtually all software packages ask the user to choose a rather rigid chart type as a prerequisite to seeing anything, despite overwhelming theoretical evidence that this approach is flawed. In Excel, as in many other packages, there is a world of difference between a bar chart and a column chart. They are not of the same nature.

A reversed perspective

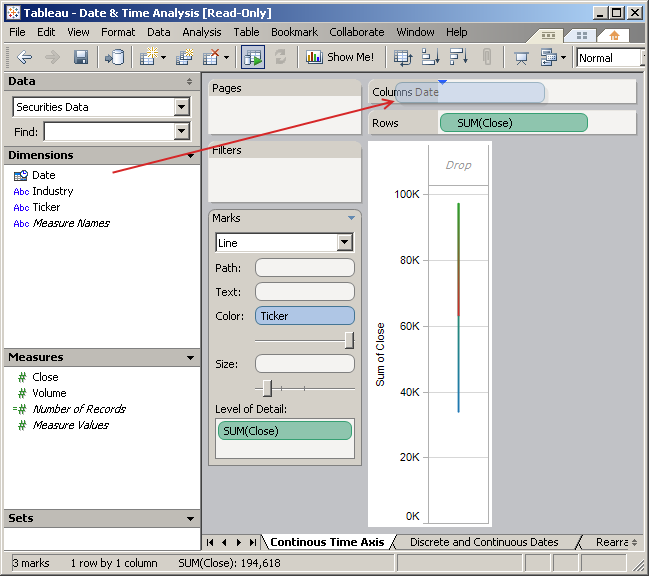

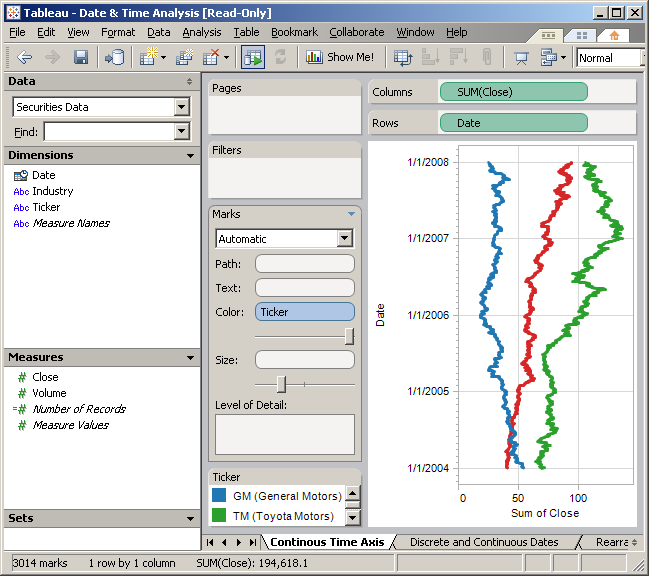

Fortunately, Tableau does it the other way round. When you first connect with your data in Tableau, it distinguishes two types of variables you can play with: dimensions and measures. And measures can be continuous or discrete.

(This is from an example file).

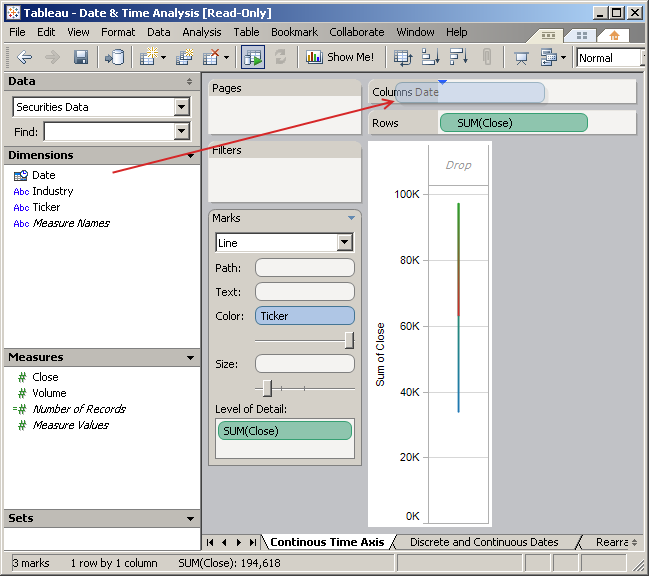

Then, all you have to do is to drag your dimensions and your measures to the center space to see stuff happening. Let’s drag “close” to the rows…

We already see something, which is not terribly useful but still. Now if we drag Date into the columns…

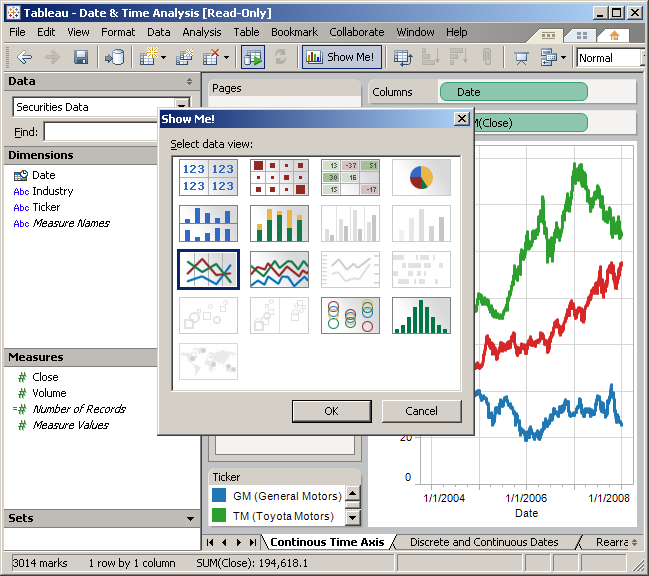

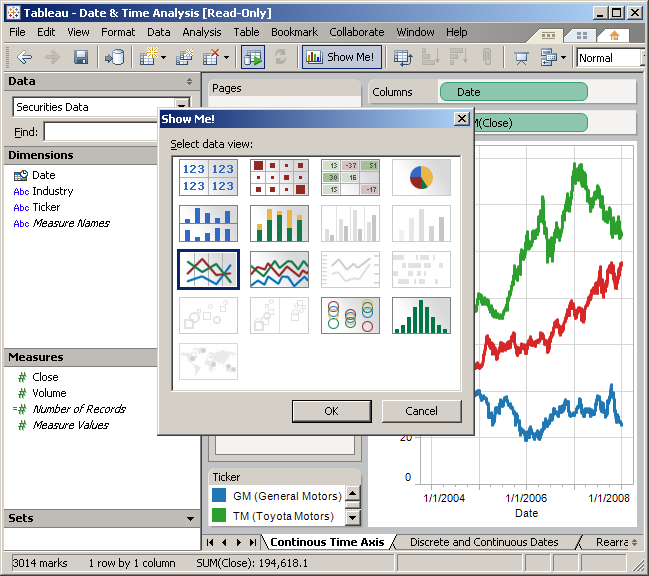

Instant line chart! The software worked out that this is the type of representation that makes the most sense in this context. You’re trying to plot continuous variables over time, so it’s pretty much a textbook answer. Let’s suppose we want another display: we can click on the aptly named “show me!” button, and:

These are all the possible representations we have. Some are greyed out, because they don’t make sense in this context. For instance, you need to have dimensions with geographic attributes to plot things on a map (bottom left). But if you mouse over one of those greyed out icons, you’ll be told why you can’t use them. So we could choose anything: a table, a bar chart, etc.

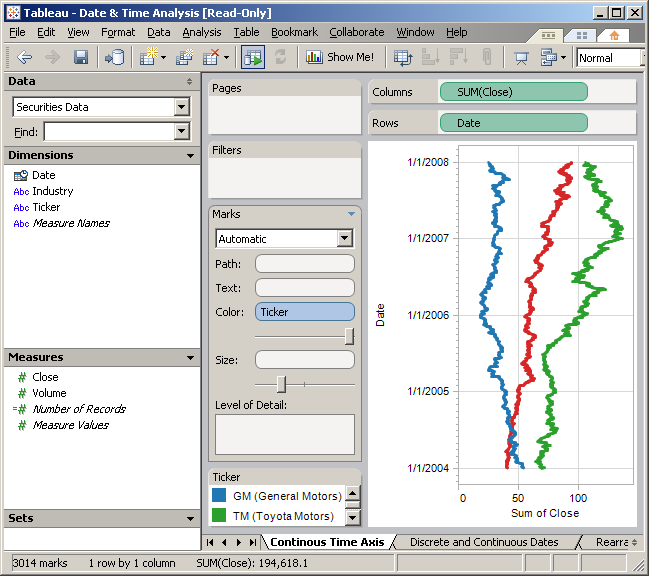

A simple thing to do would be to switch rows and columns. What if we wanted to see date vertically and the close horizontally? Just drag and drop, and:

Crafting displays

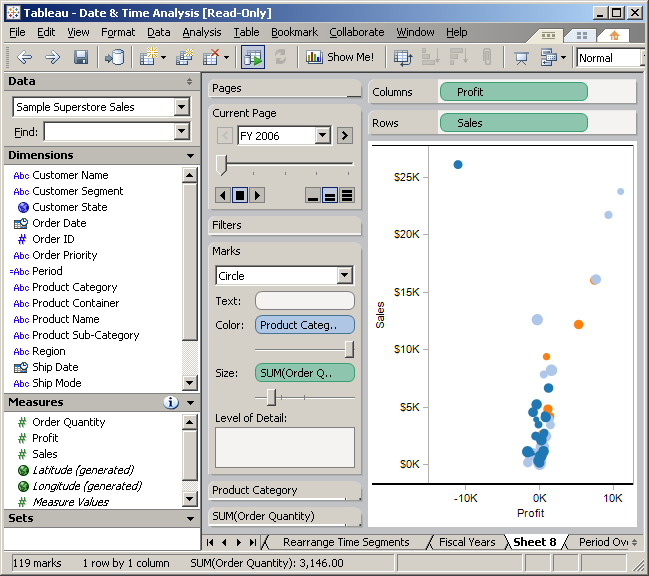

Gone are the frontiers between artificial “chart types”. We’re no longer forcing data into preset representations; rather, we assign variables (or their automatic aggregation, more on that shortly) to possible attributes of the graph. Rows and columns are two, which shouldn’t be taken too literally (in most displays, those would be better described as abscissa and ordinate), but all the areas in light grey (called “shelves”) can welcome variables: pages, filters, path, text, color, size, level of detail, etc.

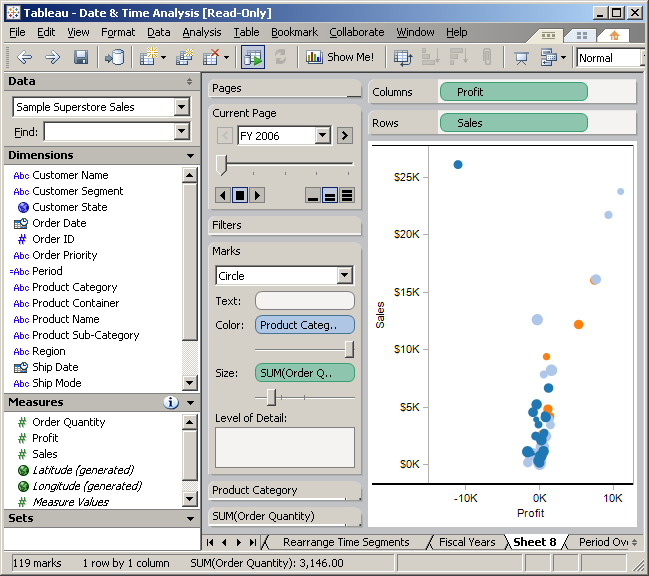

Here’s an example with a more complex dataset. Here, we’re looking at sales figures. We’re plotting profit against sales. The size of each mark corresponds to the volume of the order, and the colour to its category. Results are presented year by year, and it is possible to loop through the years. So this display replicates the specs of the popular Trendalyzer / Motion chart tool, only simpler to set up.

Note that as I drag variables to shelves, Tableau often uses an aggregation that it thinks makes more sense. For instance, as I dragged Order Date to the page shelf, Tableau picked the year part of the date. I could ask the program to use every value of the date: the display would be almost empty, but there would be a screen for each day. Likewise, when I dragged Order Quantity to the Size shelf, Tableau chose to use the sum of Order Quantity instead. Not that it makes much of a difference here, as each bubble represents only one order. But the idea is that Tableau will automatically aggregate data in a way that makes sense to display, and that this can always be overridden.
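To make the idea of automatic aggregation concrete, here is a minimal Python sketch of what “year of Order Date” and “sum of Order Quantity” amount to. It only illustrates the concept and says nothing about how Tableau does it internally; the records and field names are made up for the example.

```python
from collections import defaultdict
from datetime import date

# Hypothetical order records; field names and values are illustrative only.
orders = [
    {"order_date": date(2007, 3, 14), "order_quantity": 10},
    {"order_date": date(2007, 11, 2), "order_quantity": 5},
    {"order_date": date(2008, 1, 20), "order_quantity": 7},
]


def sum_quantity_by_year(rows):
    """Group rows by the year part of the date and sum the quantity."""
    totals = defaultdict(int)
    for row in rows:
        totals[row["order_date"].year] += row["order_quantity"]
    return dict(totals)


totals_by_year = sum_quantity_by_year(orders)
```

Overriding the default would be the equivalent of grouping on the full date instead of its year, which gives one (mostly empty) group per day.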

But if I keep the data for all the years in the display, I can quickly see the transactions where profit was negative.

And I can investigate this set of values further.

So that’s the whole idea. Because you can assign any variable to any attribute of the visualization, in the Tableau example gallery you can see some very unusual examples of displays.

Using my own data

When I saw the demos, I was a little skeptical of the data being used. I mean, things were going so smoothly, evidence seemed to be jumping at the analyst, begging to be noticed. Tableau’s not bad at connecting with data of all forms and shapes, so I gave it a whirl with my own data.

Like a lot of other official data providers, OECD’s format of choice for exporting data is SDMX, a flavor of XML. Unfortunately, Tableau can’t read that. So the next easiest thing for me was Excel.

I’m not going to get too much into details, but to come up with a worksheet that Tableau liked with more than a few tidbits of data required some tweaking and some guessing. The best way seems to be: a column for each variable, dimensions and dates included, and don’t include missing data (which we usually represent by “..” or by another similar symbol).
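For what it’s worth, the reshaping can be scripted before the data ever reaches Excel. The sketch below assumes a hypothetical “wide” export with one row per country and one column per year, and turns it into one column per variable (country, year, value) while skipping cells marked as missing. The layout, the function name and the figures are all assumptions made for illustration.

```python
def wide_to_long(header, rows, missing=("..", "")):
    """Turn rows like [country, v2006, v2007] into (country, year, value)
    records, dropping cells that hold a missing-data marker."""
    years = header[1:]
    records = []
    for row in rows:
        country, cells = row[0], row[1:]
        for year, cell in zip(years, cells):
            if cell in missing:
                continue  # leave missing observations out entirely
            records.append((country, year, float(cell)))
    return records


# Made-up sample values, only to show the shape of the data.
header = ["Country", "2006", "2007"]
rows = [
    ["France", "63.6", "64.0"],
    ["Hungary", "..", "10.1"],
]
long_records = wide_to_long(header, rows)
```

Each resulting record is one observation, which is the “column for each variable” shape described above.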

Some variables weren’t automatically recognized for what they were: some were detected as dimensions when they were measures, and date data wasn’t processed that well (I found that using 01/01/2009 instead of 2009 or 1/2009 worked much better). But again, that was nothing that a little bit of tweaking couldn’t overcome.

On a few occasions, I scratched my head quite hard trying to understand why I could get Y-o-Y growth rates for some variables but not for others, or how to make custom calculated fields. Note that there are plenty of online training videos on the website. I found myself climbing the learning curve very fast (and have heard similar statements from recent users who quickly felt empowered) but am aware that practice is needed to become a Tableau Jedi. What I found reassuring is that without prior knowledge of the product, but with exposure to data design best practices, almost everything in Tableau seems logical and simple.
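The date tweak is easy to automate. Here is a small Python sketch that expands “2009” or “1/2009” into a full date string; it assumes the short form is month/year and that the output should be day/month/year, both of which are my guesses rather than anything Tableau documents. The function name is hypothetical.

```python
from datetime import date


def to_full_date(value):
    """Expand 'YYYY' or 'M/YYYY' into 'DD/MM/YYYY', using the first day
    of the period. Assumes month/year order for the short form."""
    parts = value.strip().split("/")
    if len(parts) == 1:        # e.g. "2009"
        year, month = int(parts[0]), 1
    elif len(parts) == 2:      # e.g. "1/2009"
        month, year = int(parts[0]), int(parts[1])
    else:
        raise ValueError(f"unexpected date format: {value!r}")
    return date(year, month, 1).strftime("%d/%m/%Y")


full_from_year = to_full_date("2009")
full_from_month = to_full_date("3/2009")
```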

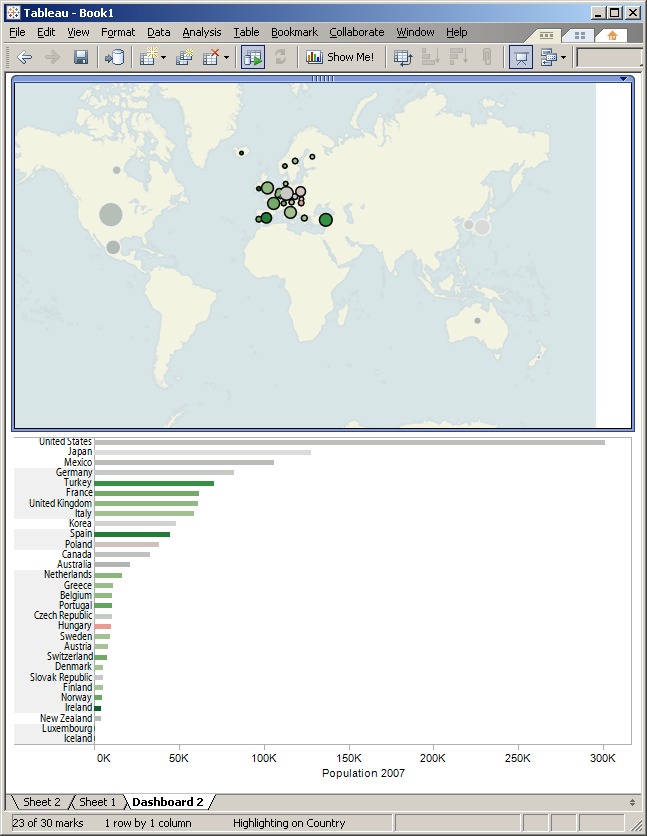

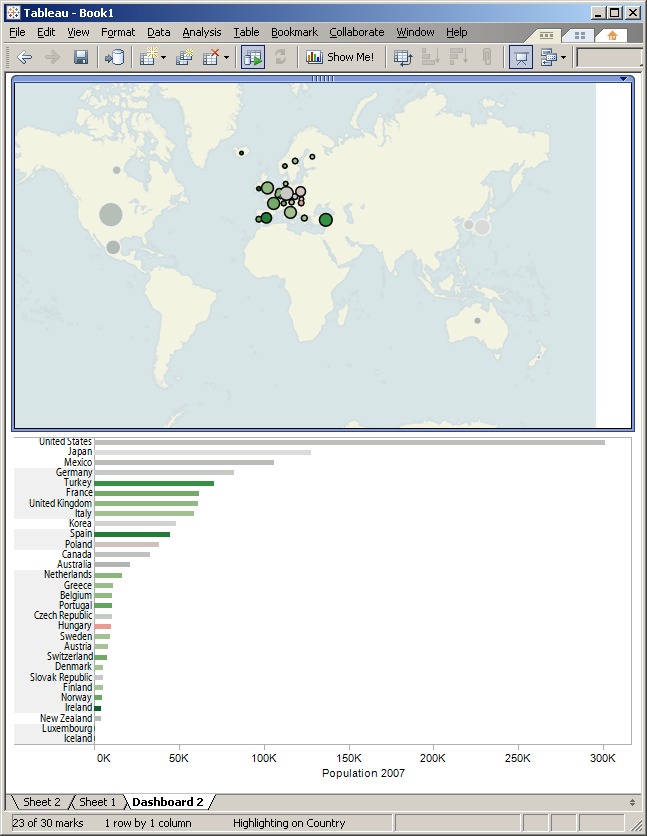

But anyway – I was in. Here’s my first Tableau dashboard:

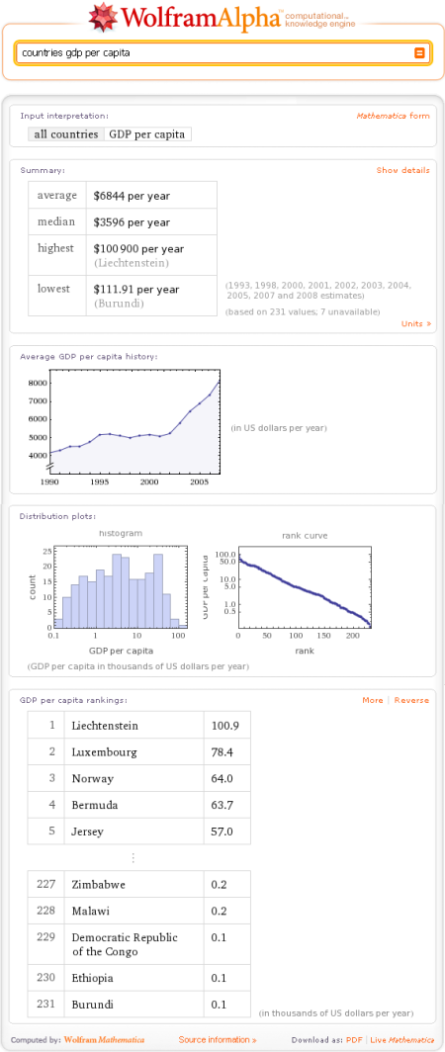

A dashboard is a combination of several displays (sheets) in one space. And believe me, it can become really sophisticated, but here let’s keep it simple. The top half is a map of the world with bubbles sized after the 2007 population of OECD countries. The bottom half is the same information as a bar chart, with a twist: the colour corresponds to the population change in the last 10 years. So the USA (green) has been gaining population while Hungary has seen its numbers decrease.

I’ve created an action called “highlighting on country” to link both displays. The best feature of these actions is that they are completely optional, so if you don’t want to have linked displays, it is entirely up to you and each part of the dashboard can behave independently. You can also bring in controls to filter or animate data, which I left out for the sake of simplicity. However, you can still select data points directly to highlight them in both displays, like this:

Here I’ve highlighted the top 5 countries. The other ones are muted in both displays. Here my colour choice is unfortunate because Japan and Germany, which are selected, don’t look too different from the other countries. Now I can select the values for the countries of Europe:

And you’ll see them highlighted in the bottom pane.

Display and style

Representing data in Tableau feels like flipping the pages of a Stephen Few book, which is more than coincidental as he is an advisor to Tableau. From my discussion with the Tableau consultant who called me, I gather that Tableau takes pride in its sober look and feel, which fervently follows the recommendations of Tufte and Few. I remember a few posts from Stephen’s blog where he lashed out at business intelligence vendors for their vacuous pursuit of glossiness over clarity and usefulness. Speaking of Few, I’ve upgraded my Tableau trial by re-reading his previous book, Information Dashboard Design, and I could really see where his philosophy and that of Tableau clicked.

So there isn’t anything glossy about Tableau. Yet the interface is state-of-the-art (no more, no less). Anyone who has used a PC in the past 10 years can use it without much guessing. Colours of the various screen elements are carefully chosen and command placement makes sense. Most commands are accessible in contextual menus, so you really feel that you are directly manipulating data the whole time.

When attempting to create sophisticated dashboards, I found that it was difficult to make many elements fit on one page, as the white space surrounding all elements becomes incompressible. I tried to replicate displays that I had made or that I had seen around. I was often successful (see the motion chart reproduction above), but sometimes I couldn’t achieve in Tableau the level of customization that I had with visualizations coded from scratch. Then again, even Tableau’s simplest representations have many features and would be difficult to re-code.

Sharing data

According to Dan Jewett, VP of product development at Tableau,

“Today it is easier to put videos on the Web than to put data online.”

But my job is precisely to communicate data, so I’m quite looking forward to this state of affairs changing. Tableau’s answer is twofold.

The first half is Tableau Server. Tableau Server is software that organizes Tableau workbooks for a community so its members can access them online, from a browser. My feeling is that Tableau Server is designed to distribute dashboards within an organization, less so to anyone on the internet.

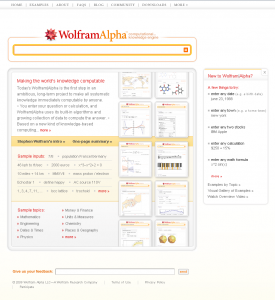

That’s where the second part of the answer, Tableau Public, comes into play. Tableau Public is still in closed beta, but the principle is that users would have a free desktop application which can do everything that Tableau Desktop does, except saving files locally. Instead, workbooks would have to be published on Tableau servers for the world to see.

There are already quite a few dashboards made by Tableau Public’s first users around. See for instance How Long Does It Take To Build A Technology Empire? on one of the WSJ blogs.

Today, there is no shortage of tools that let users embed data online without technical manipulations. But as of today, there is no product that comes close to this embedded dashboard. Stephen McDaniel from Freakalytics notes that due to Tableau’s technical choices (JavaScript instead of Flash), dashboards from Tableau Public can be seen on a variety of devices, including the iPhone.

I’ve made a few dashboards that I’d be happy to share with the world through Tableau Public.

This wraps up my Tableau review. I can see why the product has such an enthusiastic fan base. People such as Jorge Camoes, Stephen Few, Robert Kosara, Garr Reynolds, Nathan Yau, and even the Federal CIO Vivek Kundra have all professed their love for the product. The Tableau Customer Conference, which I’ve only been able to follow online so far, seems to be more interesting each year. Beyond testimonials, the gallery of examples (again at http://www.tableausoftware.com/learning/examples, but do explore from there to see videos and white papers), still in the making, shows the incredible potential of the software.