If you are using computers for work, chances are that you are spending a good portion of your day with Microsoft products such as the Office suite. Some hate it, some love it, but to hundreds of millions it’s part of our daily lives and its design choices affect how we think and work in a much more profound way than we are aware of. So, the release of a new version of Office is always a significant event.

I’ve just installed Office 2010 and here are my first impressions.

The UI is rationalized.

The UI is rationalized.

The interface will be familiar to Office 2007 users – they are still using the ribbon. Only a few buttons have been added to the applications I’ve tested, and the others have fortunately not moved since the previous version. However, the ribbon’s colours have been muted to a conservative white to grey gradient, which is much easier on the eyes. The added benefit is to make highlighted sections of the ribbon stand out much more efficiently.

Highlighting a section works much better against a sober gray than against a vivid blue.

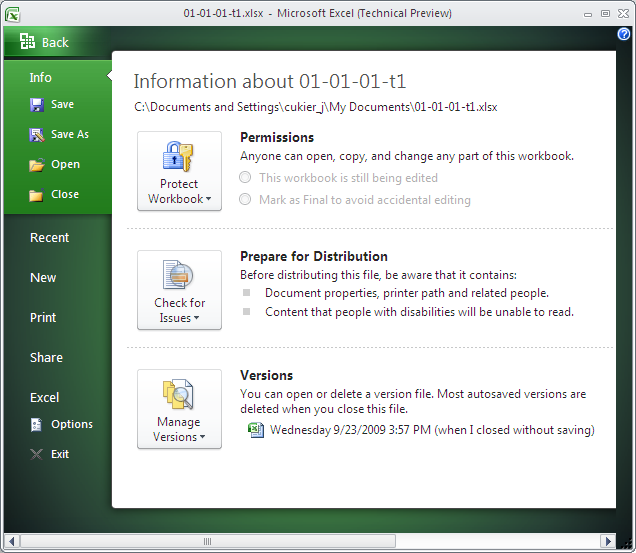

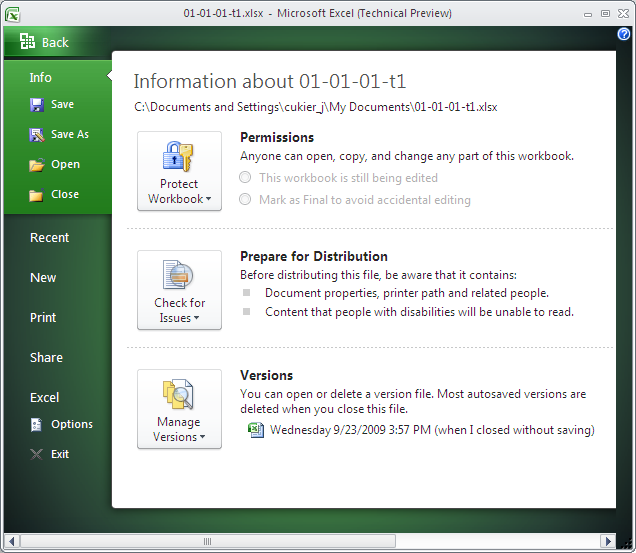

The one button that changed was the top-left Office button. Frankly, what it was for was obvious to no-one in Office 2007. Due to its appearance, it wasn’t really clear that it was clickable, and the commands it gave access to were a mixed bunch – file control, program options, printing, document properties… which, before, were not in the same top-category.

This new area is called "Office backstage" and is a welcome change to the akward "file" menu or office button from previous versions.

In Office 2010, the Office button is still there, but this time, it looks like a button and is much more inviting. This time, it presents the user with the various commands on a separate screen. That way, commands are well-categorized, and there is ample space for UI designers to explain those commands which are not clear. This had not been possible when all those commands were forced to fit in one tiny menu.

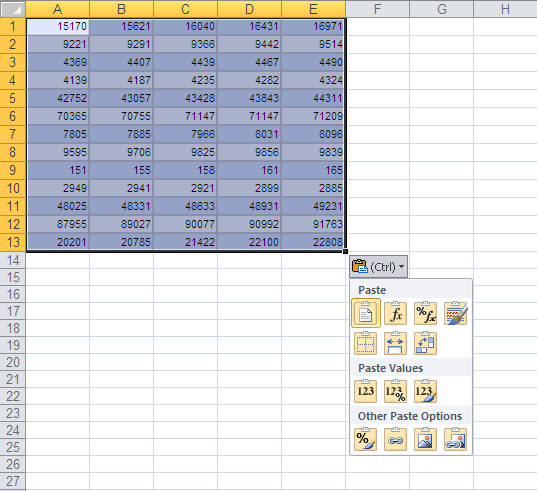

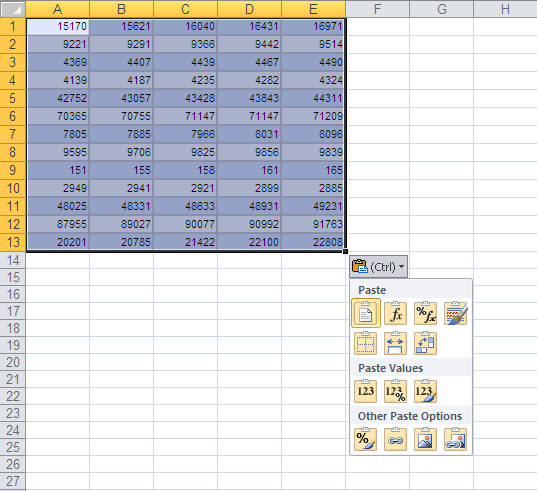

Another thing that jumped at me when I started manipulating the programs were the improvement in the copy/paste interface. It’s fair to say that pasting has always been a very time-consuming task. It had never been easy, for instance, to paste values only or to keep source formatting, without having to open menus and choose options which require time and effort. Besides, some pasting options descriptions are cryptic and a bit daunting, so novice users aren’t encouraged to use them for fear of what might happen.

I've been using Excel for about 15 years so I know my way around. But improvement in the paste interface directly translates into productivity gains.

Now the various pasting options are promoted within the contextual menu – they are big icons, and it is possible to preview how pasted material would look before pasting. The best part is that these commands are now accessible via native keyboard shortcuts, so we no longer need a string of 4 mouse clicks, or having to key in alt+E, V, S, enter alt + H, V, S, V, enter in sequence. After a normal paste (ctrl +V) you can hold control and choose a one key option, such as V for values, T for transposing, etc. Much better.

Changes in the Excel chart engine

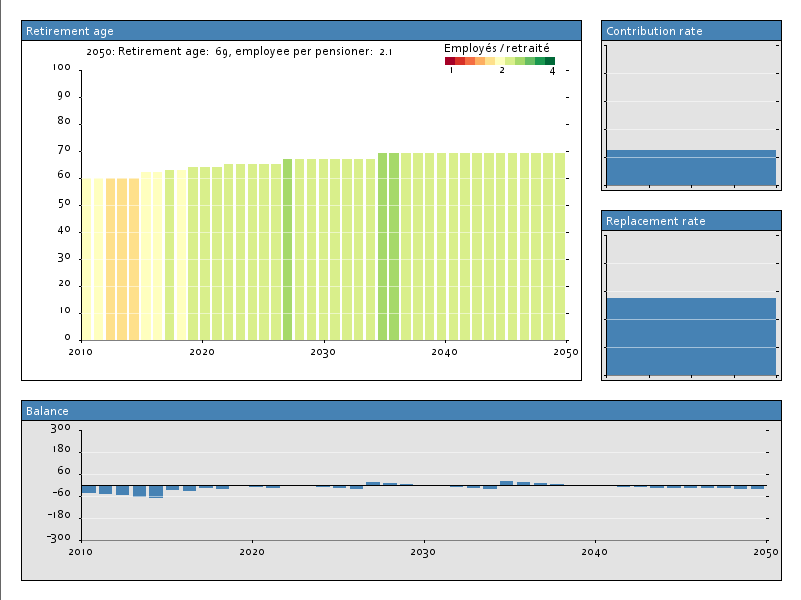

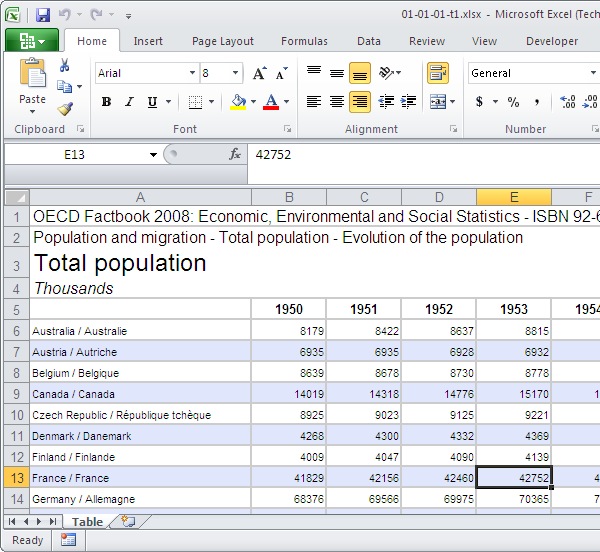

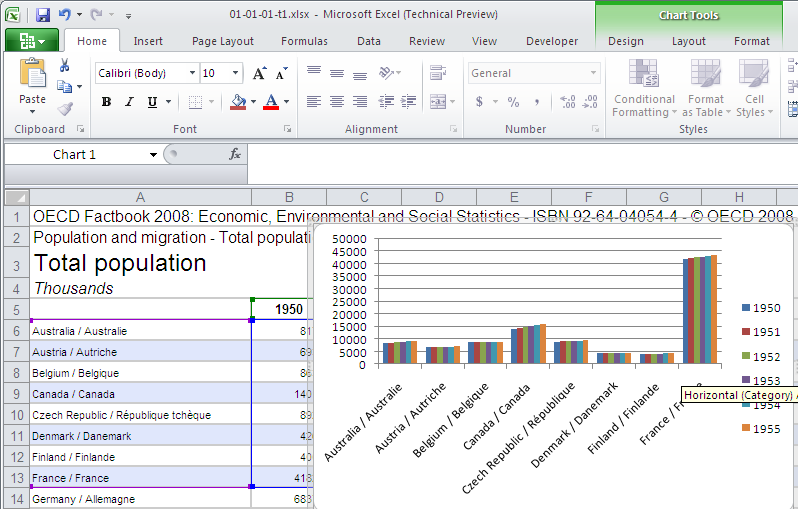

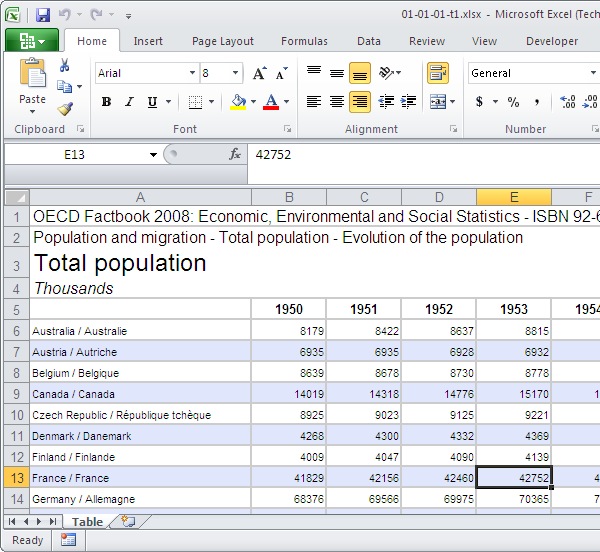

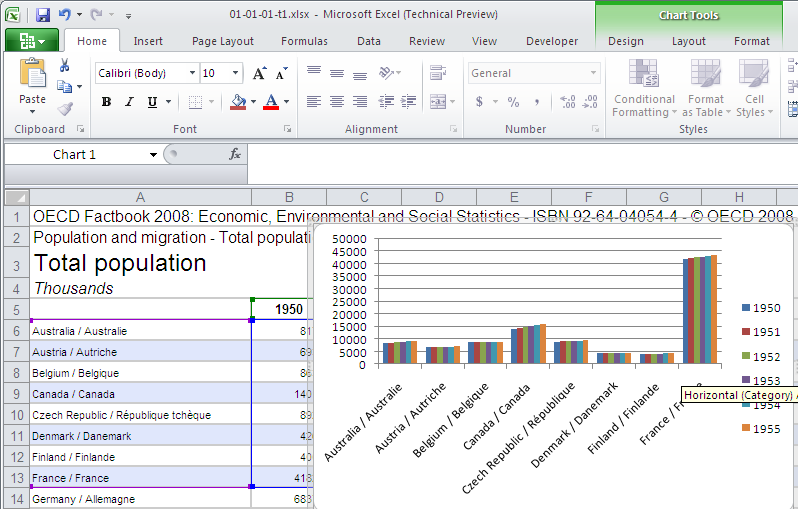

There are 3 ways in Excel to represent numbers graphically: charts proper, pivot charts and sparklines.

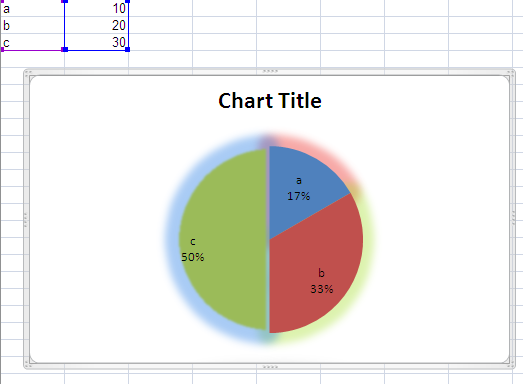

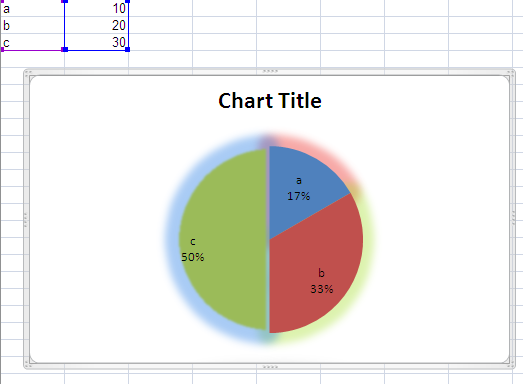

Charts and pivot Charts didn’t see much improvement since the previous version of Excel. The formatting options move along in the direction initiated by Excel 2007: in addition to the controversed 3-D format set of options, users now have an advanced “shadow” and “glow and soft edges” submenus to spice up their charts. The interface for designing gradient fills has been upgraded. The underlying functionality remains unchanged but it is now easier to control. However, the pattern fill option returns, which is great news for people who print their graphs in B&W.

Even more complex formatting options mean a greater chance to use them poorly

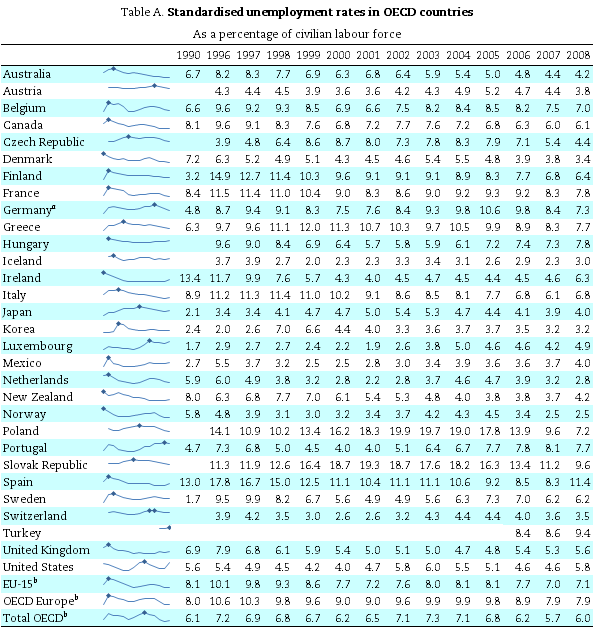

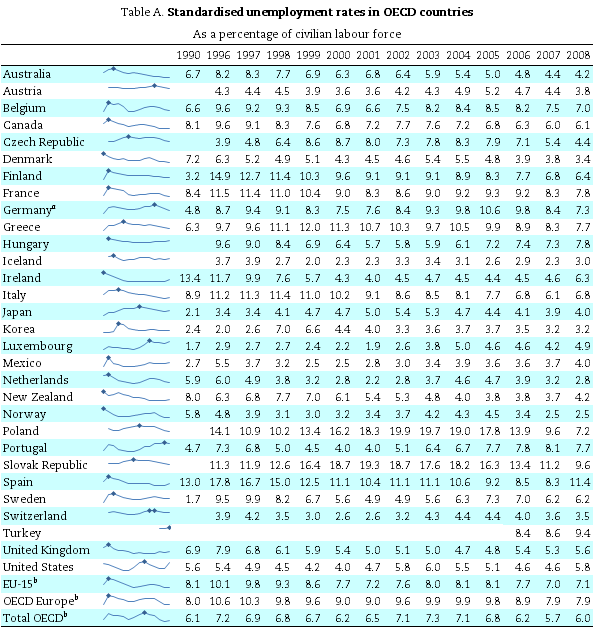

Sparklines are the real innovation of Excel 2010. Sparklines are a minimalist genre of chart that has been designed to fit in the regular flow of the text – they don’t require more space to be legible and efficient. While sparklines do not allow a user to look up the value of a specific data point, they are very efficient for communicating a trend. As such, they are increasingly used in dashboards and reports. There has been 3rd-party solutions to implement them in Excel but this native implementation is robust and well done. This will put sparklines on the radar for the great number of people who didn’t use them because they were not aware of their existence.

Sparklines give immediate insight on the trends in this data table. A dot marks when the maximum value was reached. That makes it easier to compare peaks at a glance.

Changes in other applications

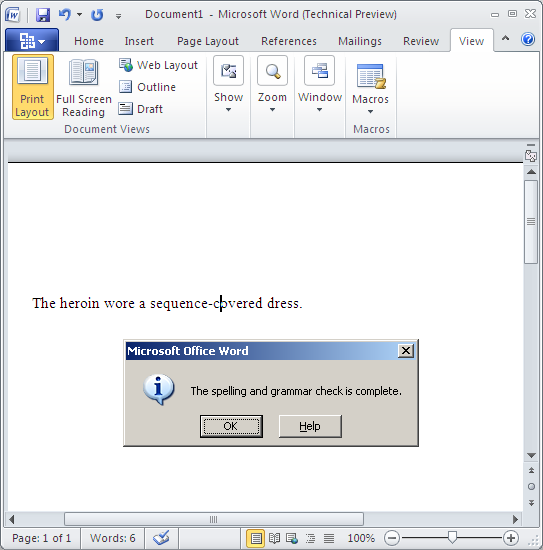

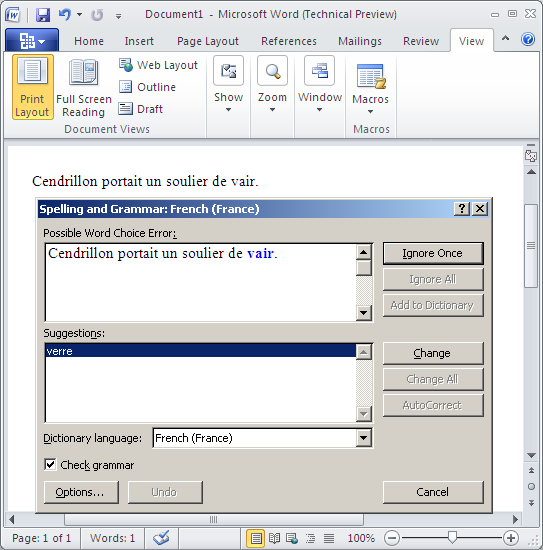

Word has advanced options for opentype fonts, for instance, if your font has several character sets, you can now access them from Word. This is especially good for distressed fonts or the excessively ornate ones. In addition to kerning, it is now possible to control ligatures (i.e. to allow users to specify how ff, fl or fi would appear on screen, as one unique glyph or as two separated letters). Another new feature of Word is an advanced spell checker who is able to warn you of possible word choice errors, when using homonyms for instance.

On my setup, these 3 options didn’t really work, but it’s a beta and I understand the intent.

The advanced spell checker didn't catch those words which were quite obviously used out of context.

In French, it picked sides in a famous spelling controversy. Many people believe that Perrault originally wrote that Cindirella wore fur slippers (soulier de vair). Microsoft sides with Disney on that one and glass slippers (souliers de verre).

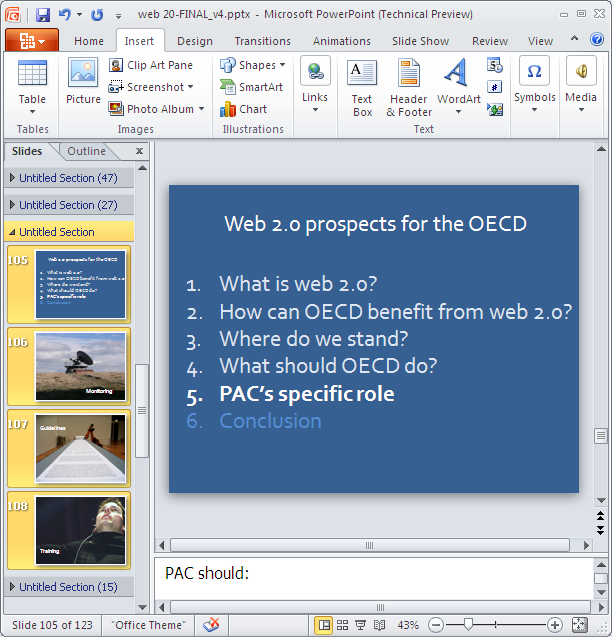

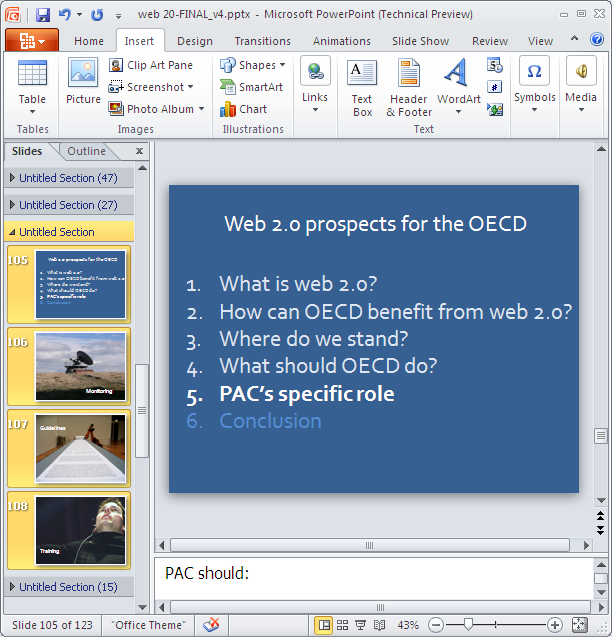

Powerpoint features 3 high-level changes: the possibility to structure a long presentation using sections, which somehow helps. However, as far as I could see, sections are only a grouping feature. There are few operations that can be performed on the section as a whole (as opposed to on all the presentation, or on each slide separately). For some tasks, you can think it is the case (as selecting the section implicitly selects its slides) but you’ll see that the operation only affected the current slide. Hmm. It can be useful to manage a presentation after it’s done, but IMO this will reduce the amount of time people spend designing their presentation away from powerpoint which is ultimately a bad thing.

Powerpoint sections make it easier to manage very long documents.

Powerpoint 2010 also features 3D transitions not unlike those of Keynote ’08. It is also possible to include movie clips in presentation. Wasn’t this already the case? Previously, you’d have to embed video files in your presentations. Now it is possible to embed online videos as well. I’m not quite sure about these two options really, the first one for ideologic reasons, the 2nd because I wouldn’t recommend any speaker to rely overly on an internet connection and a video hosting service during a live presentation.

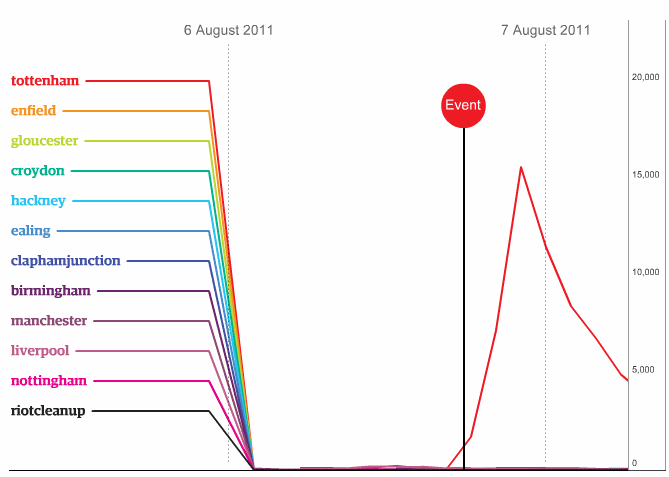

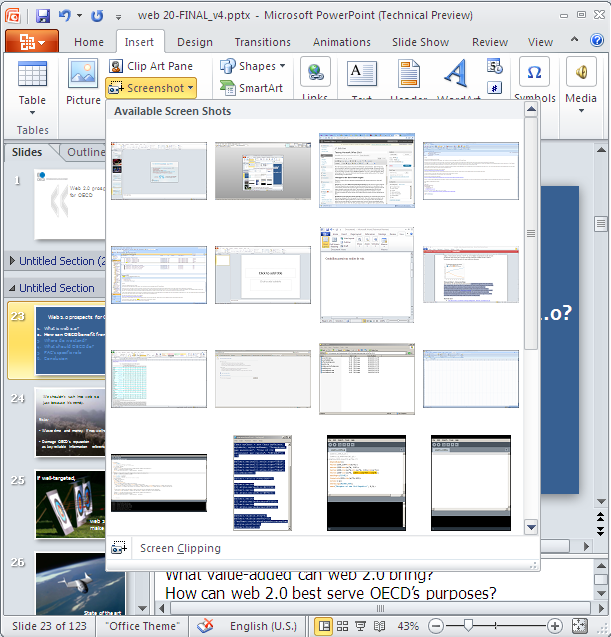

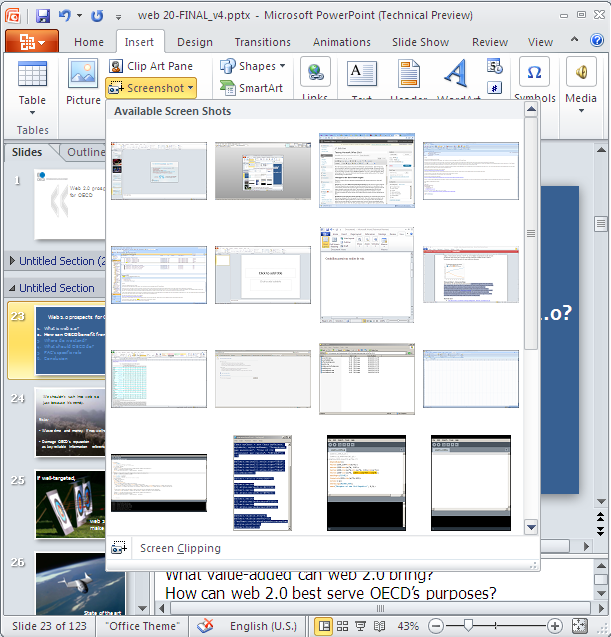

The insert screenshot shows a gallery from all my open windows to choose from. The screen clipping tool allows one to insert only a section of the window. Neat!

There’s another thing available everywhere in Office but which is possibly most useful in powerpoint, that is, insert screenshot. By clicking on this button, you have a list of thumbnails of all your open windows to choose from, this really reduces the hassle of using a screen capture tool, or worse, to manually do a screen capture, paste it in an image editing program, crop the image, save it to an acceptable format and copy/paste it again where you need it. It is possible to only copy part of these screens, too. It’ s quite well done.

Overall impressions

I’m impressed with the thinking that went into the interface. The ribbon was already a great demonstration of out-of-the-box thinking and looked great on paper. I wasn’t thrilled to use it as the commands I had been using for some 15 years were not always easily found, but it seems that first-time users of Office 2007 outweight those who’ve used previous versions. The execution of the ribbon in Office 2010 is improved, and the team allowed themselves to go beyond some arbitrary constraints they had imposed to themselves, such as the pasting options or the office button. Well done.

I’m happy that sparklines have been added to Excel. In the next few years, we’ll find even better usage for them. However, I’m disappointed that the charting options remain essentially unchanged. Take the pie chart for instance. Everyone is aware of its limitations. There are many alternatives which would be easy to implement in Excel. Also, I’m disappointed that the charting mechanism remains the same: present the user with a long list of chart types, without supporting their reasoning in the choice of one over the other. There should be a chart wizard that would ask the user what do they want to show with the data and suggests the best choice (and not many possible choices) of chart.

I am not sure about the improved spell checker. Improved means increased dependency on the tool, which is the reason why typos haven’t been eradicated despite the technology.

I am very skeptical about all the advances of the Office product into design. Office users are not designers. Or rather, to be a designer requires a specific form of critical reasoning, not a new tool. More sophisticated graphical options allow novice users to achieve complex results without going through that phase of reasoning, which ultimately won’t help them.